Every day, site owners run into lots of technical problems in Google Webmaster Tools, in areas such as Sitelinks, robots.txt, Crawl Errors, Remove URLs, Sitemap submissions, Malware, Sitemap health, Traffic sources and Search Queries. Today the most common problem is crawl errors, and some of us are still confused about what the Crawl Errors report actually lists. It has two parts: site errors, which prevent Googlebot from accessing your site at all, and URL errors, which Googlebot encountered when trying to crawl specific URLs. You can search for specific URLs or errors. In practice, most of what you will see in Google Webmaster Tools are URL errors: errors Googlebot ran into while crawling the URLs in your sitemap, each one tied to that specific URL only.

In addition to listing the URLs Googlebot had difficulty crawling, the tool shows the type of problem and, where possible, the page or pages on which Google found the URL. The most important URLs are listed first.
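To make the difference between errors that stop Googlebot from reaching a site at all and errors tied to one specific URL more concrete, here is a minimal Python sketch that uses only the standard library. It is our own rough approximation, not how Googlebot actually classifies problems: if the server answers with an HTTP error status, the problem belongs to that one URL (comparable to a URL error); if no HTTP response comes back at all, for example because the DNS lookup or the connection fails, it looks more like a site error. The function name `classify_fetch` is hypothetical.

```python
import urllib.error
import urllib.request


def classify_fetch(url, timeout=10):
    """Fetch url once and roughly classify the outcome (illustration only).

    Returns ("ok", status), ("url", status) or ("site", reason).
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            # The server answered successfully (urlopen follows redirects).
            return ("ok", resp.status)
    except urllib.error.HTTPError as err:
        # The server answered, but this particular URL failed (404, 500, ...):
        # comparable to a URL error.
        return ("url", err.code)
    except OSError as err:
        # No HTTP response at all (DNS failure, refused connection, timeout):
        # comparable to a site error. URLError is a subclass of OSError.
        reason = getattr(err, "reason", err)
        return ("site", str(reason))
```

For example, `classify_fetch("https://www.example.com/no-such-page")` would return `("url", 404)` if that server answers the missing page with a 404 status.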

If you want to clear a specific crawl error, keep your sitemap clean, and help Google crawl your site and show it properly in search results, here is how to remove crawl errors for good and fix the problem manually in Google Webmaster Tools.

The Entire Procedure

1. Go to https://www.google.com/webmasters/tools

2. Now open your site's Dashboard

3. Here you can see your site's crawl errors

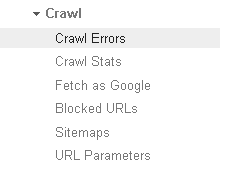

4. Go to Crawl > Crawl Errors

5. Here you can see the top 1,000 pages with errors

6. Click a link (every link in this list has an error; otherwise it would not be shown)

7. A pop-up window will appear with details of the page error

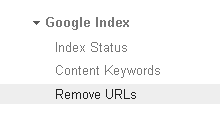

8. Copy the link and, in another window, go to Google Index > Remove URLs

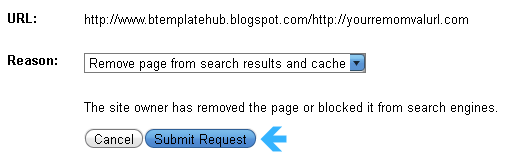

9. Click the Create a new removal request button; a box will open

10. Paste the copied URL into the box and submit the request

11. A message will confirm that the URL has been added for removal

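Note: Within three to four days, Google will remove the URL you requested from both the search results and the crawl error pages. A removal made with this tool is temporary (about 90 days), so the page itself should also be gone or fixed.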

What Is Mark As Fixed?

Mark As Fixed is a tool located next to the Download button on the Crawl Errors page. Many of us wonder how it works: does it remove the URL, does it tell the search engine bot to take some action about the error page, or does it resolve the problem automatically?

On the Crawl Errors page, you can mark the error for a specific URL as fixed. This removes the URL from the list, telling Google that you no longer want to see that issue. However, unless you resolve the underlying issue, the error will reappear the next time Googlebot crawls that URL. So it is better to fix the problem or remove the URL manually, as described above, for better ranking and crawling by Googlebot.
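Since a URL marked as fixed comes back unless its underlying issue is resolved, it helps to re-check each URL before using Mark As Fixed. The following Python sketch (standard library only) re-requests a list of URLs and groups them into three buckets: URLs that now load and can reasonably be marked as fixed, URLs that answer 404 or 410 and are candidates for the Remove URLs tool if you deleted those pages on purpose, and URLs that still fail and need fixing first. The names `http_status` and `triage` and the bucket rules are our own assumptions, not Google's rules.

```python
import urllib.error
import urllib.request


def http_status(url, timeout=10):
    """Return the final HTTP status for url, or None if no HTTP response came back."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status  # success, after following any redirects
    except urllib.error.HTTPError as err:
        return err.code  # the server answered with an error status
    except OSError:
        return None  # DNS failure, refused connection, timeout, ...


def triage(urls, timeout=10):
    """Group crawl-error URLs by what to do next (a rough heuristic only)."""
    plan = {"mark_as_fixed": [], "request_removal": [], "fix_first": []}
    for url in dict.fromkeys(urls):  # drop duplicates, keep the original order
        status = http_status(url, timeout)
        if status is not None and 200 <= status < 300:
            # The URL works now, so marking it as fixed should stick.
            plan["mark_as_fixed"].append(url)
        elif status in (404, 410):
            # Not Found / Gone: a Remove URLs candidate if the page was
            # deleted on purpose; otherwise restore the page or fix the link.
            plan["request_removal"].append(url)
        else:
            # Still failing (5xx, unreachable, ...): fix the cause first,
            # or the error will reappear on the next crawl.
            plan["fix_first"].append(url)
    return plan
```

You could paste the URLs from the Crawl Errors list into a Python list, call `triage(urls)`, and work through each group. Keep in mind that a soft 404 (an error page served with status 200) would land in `mark_as_fixed` here, so a quick manual look is still worthwhile.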
